Reading 7: Safety

This assignment is due on Tuesday, December 3 at 11:59pm. Don’t bullshit.

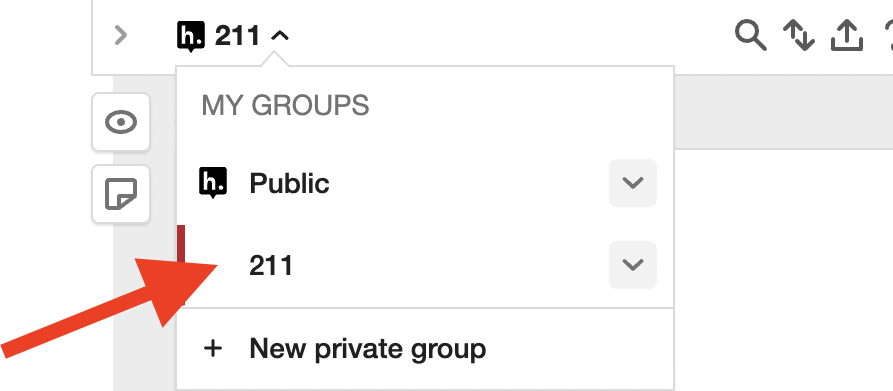

When you follow the link above with your browser, you should see Leveson’s article, as well as a button “<” in the upper-right corner. Use the “<” button to expand the annotation sidebar.

You may need to log in to Hypothesis, using the account you created in Reading 1: Who can define the bigger number?

**Pay attention to this next step**. Skipping it is a common mistake that results in many zeros on the assignment. After logging in, you are not done. You still have to expand the drop-down menu “Public” at the top right of the sidebar, make sure it says “my groups”, and change it to our course group “211”.

You belong to this group because you used the invite link in Reading 1: Who can define the bigger number? If you don’t post to this group, then other students won’t see your annotations, and you won’t get credit.

In the future, when … I will … instead of ….

Make your question clear, descriptive, and specific.

Don’t be too brief, terse, or vague. Don’t just say “What’s this” or “I don’t understand”.

Don’t just summarize.

Exercise 4. Once you have added your annotations, respond to someone else.

Optional: read more about Therac-25 in Appendix A of Leveson’s book “Safeware: System Safety and Computers”. Here’s one key part: